Leadership & Project Management

Tuesday, May 29, 2012

Wednesday, May 16, 2012

My self-study on BI - Business Intelligence (TBC)

Business Intelligence Research

I. ETL Testing

A. According to the Informatica product specification

1. Source-to-destination loading, one-to-one (no transformation)

2. Source-to-destination loading with transformation

· Transformations are built with the pre-built operators in the Informatica product, so no programming skill is needed

3. Production validation: validate that the source data was loaded into the Production environment correctly

B. According to GeekInterview.com

Testing covers:

1. Data validation within Staging, to check all:

· Mapping rules

· Transformation rules

2. Data validation within Destination, to check that:

· Data is present in the required format

· There is no data loss from Source to Destination

C. Data-centric Testing

Applied specifically to ETL processes, where data movement happens.

1. Technical Testing: ensures that the data is copied, moved or loaded from the source system to the target system correctly and completely. Technical testing is performed by comparing the target data against the source data. List of testing techniques:

a. Checksum Comparison: checks that quantitative information is the same in the source and destination databases, using a checksum technique. For example: the number of records in the source database compared to the destination; or ACCUMULATED information in the source database compared to CALCULATED information in the destination database. E.g. when monthly salaries in the source database are summarized into annual data, a new column in the destination database contains the sum of monthly salaries paid within each year. The total of monthly salaries of all employees (source) and the total of yearly salaries of all employees (destination) should be equal.

b. Domain Comparison: compares the list of unique entries (unique values of a field) in the source database to the unique entries in the destination database. For example: the list of Employee Names in the Salary table of the source database compared to the list of Employee Names in the destination database, like a dictionary list.

c. Multi-value Comparison: similar to Domain/Dictionary/List comparison, but it compares the WHOLE record or the CRITICAL columns between the source and destination databases, including the MATCHING between these columns. For example: Domain comparison reports the correctness of the Employee list between source and destination; Checksum comparison reports the correctness of the Salary entries between source and destination; but neither comparison guarantees that each Salary entry is assigned to the right Employee entry (the MATCHING between columns). Multi-value comparison discovers such issues by comparing the key columns/attributes of each record between the source and destination databases.
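The three techniques above can be sketched in a few lines of Python. This is only an illustration under assumptions: the tables `src_salary` and `dst_salary_year`, their columns and the sample rows are hypothetical, and an in-memory SQLite database stands in for the real source and destination systems. The destination totals are swapped between two employees on purpose, so the checksum and domain checks pass while the multi-value check catches the problem.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with hypothetical source and destination tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE src_salary (employee TEXT, year INTEGER, month INTEGER, amount REAL);
        CREATE TABLE dst_salary_year (employee TEXT, year INTEGER, total REAL);
        INSERT INTO src_salary VALUES
            ('An', 2011, 1, 1000), ('An', 2011, 2, 1000),
            ('Binh', 2011, 1, 1500), ('Binh', 2011, 2, 1500);
        -- The yearly totals of the two employees are swapped on purpose.
        INSERT INTO dst_salary_year VALUES ('An', 2011, 3000), ('Binh', 2011, 2000);
    """)
    return conn


def checksum_match(conn):
    """Checksum comparison: the grand total must be the same on both sides."""
    src = conn.execute("SELECT SUM(amount) FROM src_salary").fetchone()[0]
    dst = conn.execute("SELECT SUM(total) FROM dst_salary_year").fetchone()[0]
    return src == dst


def domain_match(conn):
    """Domain comparison: the set of distinct employee names must be the same."""
    src = {row[0] for row in conn.execute("SELECT DISTINCT employee FROM src_salary")}
    dst = {row[0] for row in conn.execute("SELECT DISTINCT employee FROM dst_salary_year")}
    return src == dst


def multi_value_mismatches(conn):
    """Multi-value comparison: compare the yearly total per (employee, year) key."""
    expected = {
        (emp, year): total
        for emp, year, total in conn.execute(
            "SELECT employee, year, SUM(amount) FROM src_salary GROUP BY employee, year"
        )
    }
    actual = {
        (emp, year): total
        for emp, year, total in conn.execute(
            "SELECT employee, year, total FROM dst_salary_year"
        )
    }
    keys = expected.keys() | actual.keys()
    return sorted(k for k in keys if expected.get(k) != actual.get(k))
```

With the sample data, `checksum_match` and `domain_match` both report success, while `multi_value_mismatches` lists both employees, which is exactly the gap described in item c.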

2. Business Testing: validates business common sense, e.g. Salary/Commission cannot be less than zero. The list of rules to test against can be exhaustive, and it depends on domain knowledge and industry. To do: research a list of best practices.

3. Reconciliation: ensures that the data in the destination database is in agreement with the overall system requirements. Examples of how reconciliation helps in achieving high-quality data:

a. Internal reconciliation: data within the destination database is compared against other data in the same database (mostly in terms of business constraints), e.g. the number of shipments must always be less than or equal to the number of orders, otherwise the data is invalid.

b. External reconciliation: data within the destination database is compared to another (external) system, e.g. the Number of Employees in the destination database cannot be larger than the Number of Employees in the HR Employee Master System.

Source: http://etlguru.com/blog/?p=172
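The business and reconciliation checks described above boil down to simple predicates. A minimal sketch, assuming the counts and rows have already been queried from the databases; the function names and the row layout (`salary`, `commission` keys) are hypothetical:

```python
def negative_amounts(rows):
    """Business test: return the rows whose salary or commission is below zero."""
    return [row for row in rows if row["salary"] < 0 or row["commission"] < 0]


def internal_reconciliation(shipment_count, order_count):
    """Internal check: shipments may never exceed orders in the destination database."""
    return shipment_count <= order_count


def external_reconciliation(destination_employee_count, hr_master_employee_count):
    """External check: the destination may not hold more employees than the HR master system."""
    return destination_employee_count <= hr_master_employee_count
```

Each function returns either the offending rows or a pass/fail flag, so the results can be logged or reported by whatever process runs the checks.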

II. ETL Implementation Strategy

A. Suggested Strategy by ETLGuru.com

1. Theory

· Every time data moves, there is a need for data validation

· There are various test conditions during migration from DEV to QA, and from QA to PRODUCTION

2. Practice

· A better ETL strategy is to store all the BUSINESS RULES in centralized tables, even in the source or target system; these rules can be stored as SQL text.

· This is a kind of repository that can be called by any ETL process or auditor at any phase of the project life cycle. There is NO need to re-think or re-write the rules

· Any or all of these rules can be made OPTIONAL, a TOLERANCE can be defined for each, and they can be CALLED immediately after a process runs, or the data can be audited at leisure

· This data validation/auditing system basically contains:

a. The tables that contain the rules

b. The process that calls the rules dynamically

c. The tables that store the results of executing the rules

· Benefits

a. Rules can be added dynamically with no change to the ETL code

b. Rules are stored dynamically

c. The tolerance level can be changed without ever changing the ETL code

d. Business rules can be added or validated by a business expert without worrying about the ETL code

· This practice can be applied to ETL tools and databases: Informatica, DataStage, SyncSort DMExpress, Sunopsis, Oracle, Sybase, SQL Server Integration Services (SSIS)/DTS

Source: http://etlguru.com/blog/?p=22

III. ETL Architecture Design

A. Study shows: there are 3 proposed layers

1. Layer 1: Relational data: extract, transform and load from source to destination

2. Layer 2: Control, logging, security and authorization of ETL processes; organizing and calling sub-processes

3. Layer 3: Managing and scheduling ETL processes, recovery from failure, load balancing, etc.

B. Incremental Loading Design

1. Change Data Capture (CDC): there are 3 main approaches

· Log-based CDC

· Audit columns

· Calculation of snapshot differentials

2.

F
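Of the three Change Data Capture approaches listed above, the snapshot differential is the easiest to illustrate in isolation. A minimal sketch, assuming each snapshot fits in memory as a dictionary keyed by primary key; the function name and the data layout are hypothetical:

```python
def snapshot_diff(previous, current):
    """Compare two snapshots keyed by primary key and classify the changes."""
    # Keys that exist only in the new snapshot are inserts.
    inserted = {key: row for key, row in current.items() if key not in previous}
    # Keys that exist only in the old snapshot are deletes.
    deleted = {key: row for key, row in previous.items() if key not in current}
    # Keys present in both snapshots with a different row are updates.
    updated = {
        key: row
        for key, row in current.items()
        if key in previous and previous[key] != row
    }
    return inserted, updated, deleted
```

Only the rows returned here would need to be pushed to the destination in an incremental load. For large volumes the comparison would usually be done in the database or on hashed rows rather than in memory.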

IV. Advanced (mostly for large-scale databases/volumes)

A. MapReduce

B. Hadoop

C. A Highly Scalable Dimensional ETL Framework based on MapReduce

Tuesday, May 1, 2012

Websites/tools for checking & generating regular expressions

While working with an .htaccess file (RewriteRule), I found these tools useful for checking and generating regular expressions:

http://martinmelin.se/rewrite-rule-tester/

http://htaccess.madewithlove.be/

http://regexpal.com/

http://txt2re.com/index.php3?s=http://localhost:8282/images/dvd/index.php&-8&-16

http://gskinner.com/RegExr/
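A pattern can also be checked quickly offline before it goes into a RewriteRule. The sketch below uses Python's `re` module; the pattern and the target URL are hypothetical examples, and Python's regex dialect is close to, but not identical with, the PCRE dialect that Apache uses, so a final check in one of the tools above is still worthwhile.

```python
import re

# Hypothetical pattern: map "images/dvd/<number>" to a PHP script with an id parameter.
PATTERN = re.compile(r"^images/dvd/([0-9]+)/?$")


def rewrite(path):
    """Return the rewritten path, or None when the pattern does not match."""
    match = PATTERN.match(path)
    if match is None:
        return None
    return "images/dvd/index.php?id=" + match.group(1)
```

Calling `rewrite` with a few sample paths shows at a glance which requests the rule would catch and which it would leave alone.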

Monday, April 23, 2012

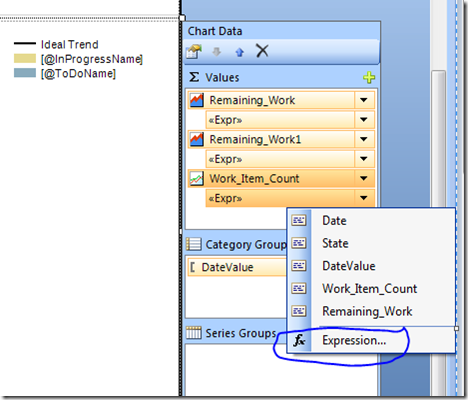

Create Burndown chart & exclude weekends in TFS

Our team has started to use TFS for managing the Product Backlog, and this is the first time I have worked with the TFS Burndown chart. Googling around, I found a page that helped us configure the Burndown chart to exclude weekends. (By default, TFS keeps weekends in the Burndown chart, so it does not correctly reflect your team's effort.) This makes sure your Burndown chart works correctly with any sprint length (2 weeks or more).

Steps:

Add the filter below to the Category Group to exclude weekends. Select the Filter tab and enter the following:

    Filter Expression: =Weekday(Fields!DateValue.Value,0)
    Type: Integer (to the right of the fx button)
    Operator: <
    Value: 6

The pop-up window should show the values above.

Then add this code to the Report (right-click in the Report, select Properties, then select Code):

    ' Define other methods and classes here
    ' Counts the Saturdays and Sundays between the start of the sprint and the given date
    Function NumberOfDaysToAdjustForWeekends(ByVal valNow As Date, ByVal startOfSprint As Date) As Int32
        Dim valCurrent As Date = startOfSprint
        Dim weekendDaysPassed As Int32 = 0
        Do While valCurrent < valNow
            valCurrent = valCurrent.AddDays(1)
            If (valCurrent.DayOfWeek = DayOfWeek.Saturday Or valCurrent.DayOfWeek = DayOfWeek.Sunday) Then
                weekendDaysPassed = weekendDaysPassed + 1
            End If
        Loop
        Return weekendDaysPassed
    End Function

Finally, add the code below to the expression of "Work_Item_Count":

    =Code.Burndown(
        Sum(Fields!Remaining_Work.Value),
        Fields!DateValue.Value.AddDays(-Code.NumberOfDaysToAdjustForWeekends(Fields!DateValue.Value, Parameters!StartDateParam.Value)),
        Parameters!EndDateParam.Value.AddDays(-Code.NumberOfDaysToAdjustForWeekends(Parameters!EndDateParam.Value, Parameters!StartDateParam.Value)))

Source: http://2e2ba.blogspot.com.au/2011/08/tfs-scrum-templates-and-burndown-report.html
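To sanity-check the weekend adjustment outside the report designer, the same loop can be mirrored in Python. This is only a sketch for experimenting with dates; the function name is a direct transliteration of the report code, and it is not part of the TFS report itself.

```python
from datetime import date, timedelta


def number_of_days_to_adjust_for_weekends(val_now, start_of_sprint):
    """Count the Saturdays and Sundays after start_of_sprint, up to and including val_now."""
    current = start_of_sprint
    weekend_days_passed = 0
    while current < val_now:
        current += timedelta(days=1)
        # date.weekday() returns 5 for Saturday and 6 for Sunday.
        if current.weekday() >= 5:
            weekend_days_passed += 1
    return weekend_days_passed
```

For a two-week sprint that starts on a Monday, the function reports four weekend days by the following Monday fortnight, which is the number of days the report expression shifts the date back by.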

Saturday, March 31, 2012

Links to follow for digital art works and marketing

Actually, I noted these links a long time ago, when I worked for a digital marketing firm. Anyway, they are worth following up in spare time.

News related to Digital Marketing in Asia Pacific

http://www.campaignsingapore.sg/Category/459,digital.aspx

Art links

Deviant Art http://browse.deviantart.com/designs/web/

Zidean Art http://zidean.com/

Digital news

Digital News http://www.campaignasia.com/digital/news/443,101.aspx

Digital Case Studies http://www.campaignasia.com/digital/case-studies/443,99.aspx

Digital Works http://www.campaignasia.com/digital/the-digital-work/443,108.aspx

Digital campaign

http://yahoo.com.vn

http://zing.vn

http://coca-cola.zing.vn

http://forum.mysamsung.vn/forumdisplay.php?f=13

Sunday, February 26, 2012

Website to look for download

I had a collection of websites to look for downloads, but I lost it. I will find it again and update this post.

http://www.filemirrors.info/

http://runamux.net/search/view/file/bms4OXpc/xcode_42_and_ios_5_sdk_for_sno

Want to download ebook?

http://forum.sachdoanhtri.com/forum/forum.php?mod=viewthread&tid=122&extra=page%3D1

http://www.svktqd.com/forum/showthread.php?t=84818

Monday, February 13, 2012

Interesting tools for PHP code metrics, detectors

http://stackoverflow.com/questions/5611736/calculate-software-metrics-for-php-projects

PHP Depend generates some nice metrics for you.

Here are those I can think about :

phpcpd -- copy-paste detector

phploc -- to do many kinds of counts (lines, classes, ...)

PHP_CodeSniffer -- to check if your code respects your coding standards.

phpmd -- mess detector

phpDocumentor / DocBlox to generate documentation (and detect what is not properly documented)

In PHP, those tools are generally not used from Eclipse, but integrated into a Continuous Integration platform.

About those, you can take a look at :

phpUnderControl -- was used a lot a couple of years ago -- it has less success now

Jenkins (More or less a fork of Hudson)

And, for that one, see Template for Jenkins Jobs for PHP Projects

Still, if you want to integrate some of those tools with Eclipse PDT, you might want to take a look at PHP Tool Integration.

See our PHP CloneDR for a tool that computes the amount and the precise location of duplicated code. It will find duplicates in spite of reformatting of the text, modification of comments, and other modifications (up to a degree of [dis]similarity).

There's an example of Joomla processed by the PHP CloneDR at the link.
